Mass loading of new data or violations of the existing procedures of data cleaning lead to abnormal database growth. That is why it is important to monitor and analyze the database growth, as well as for competent management of its structure in the course of normal growth. In this article, we will explain how to analyze these dynamics, what to do in case of abnormal growth, and why it is important to approach object compression and data file compression correctly.

What to do when data files grow

First, monitor the growth of your database. We at «DB Serv» use our monitoring based on Zabbix software to diagnose such problems. We are ready to provide you with a wide range of database administration and support services, including solving problems with normal database growth. In general, it is worth using the following approach to database dynamics.

1. Analyzing the growth dynamics of the database

Dynamics should be tracked locally (last 2-7 days) and globally (3-12 months). The key is to determine whether the growth of data files is normal or abnormal.

● When the database size growth is approximately the same or at least comparable (not exceeding the average values by more than 2-3 times) in equal time intervals it is considered normal growth. This is an inevitable process: all databases grow over time.

● A sharp increase is considered abnormal (exceeds the average values by more than 3 times). It can often be preceded by a long absence of growth as such. In an “abnormal” case, the cause of database growth is usually manual expansion (adding files) or mass insertion of data.

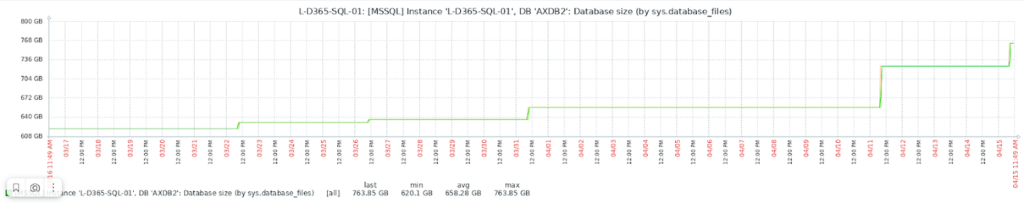

An example of normal database growth:

As can be seen from the screenshot, the observed growth is stepped, but the steps are not sharp. Although there is a significant growth over 30 days, we cannot call it abnormal.

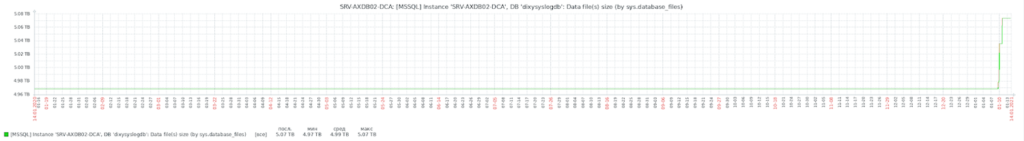

Now let’s look at an example of abnormal growth.

This growth is the result of the manual addition of files and the subsequent increase in their sizes. The graph shows that this happened for the first time in a year. If this is not planned work, it is worth paying attention to it.

2. Determining the growth potential of the database

To determine whether the database has the potential for data growth you will need information about the available database space, files, their location, and the amount of free space on discs. This is done with the help of various scripts and from the graphical interface.

If the database data file cannot grow, there is no free space in it, and attempts to insert new data into it will continue, it will corrupt the database.

In the GUI, you can figure out the potential for database growth in two steps:

1. We get the data on the amount of space in the files and the TOP tables by the occupied size.

2. See the number of data files, their location, and available disc space.

3. Assessment of the situation’s cruciality

Based on all the data obtained above, our experts conclude the criticality of the situation. If the database can grow or there is enough free space for data growth for over a month, we continue the analysis in the current priority.

If you fear, that the disk with the database or transaction log will overflow in less than a month, we recommend urgently escalating the problem. Consider compression of objects and compression of neighboring free data files (if any) as a solution.

Applying compression to objects and compressing data files

Regardless of the conclusions about the nature of the database growth made during the analysis above, it is necessary to take timely measures to preserve its performance. In particular, it is necessary to perform compression.

1. How «DB Serv» analyses data

Often the growth of data files is related to the growth of the data itself: in this situation, only the owner of the data can decide to delete it. Therefore, «DB Serv» does not delete data without permission, but only provides its clients with information about the tables that they need to clean and possibly analyze the database for unused indexes.

We can also analyze the 5-10 largest tables to see if compression can be applied to them (depending on the DBMS versions it can be at the page level or the row level, but mostly compression is applied at the page level in Enterprise edition).

If the DBMS version is 2016 and newer, we check the DBMS edition and its features. For example, Microsoft SQL Server 2019 Standard Edition does not allow you to perform index operations online, but it has started to allow you to do paginated compression, which was previously available only in Enterprise.

You have to pay special attention to the types of data in tables to which you apply compression, as well as the number of rows in them. In the case of large text fields (for example, [varchar](255) or [varchar](510)) or a large number of rows in a table (more than 500 million), the process of applying compression can be extremely demanding on the space in the transaction log (up to ten times the size of the compressed data).

Compression of such objects should also be evaluated from the point of view of possible risks. For this purpose, it is necessary to calculate the compression efficiency.

2. How to calculate compression efficiency

You can see whether compression is applied to a table or not in its properties or via the graphical interface. However, this information is not always accurate, because compression may have a different coefficient on different indexes.

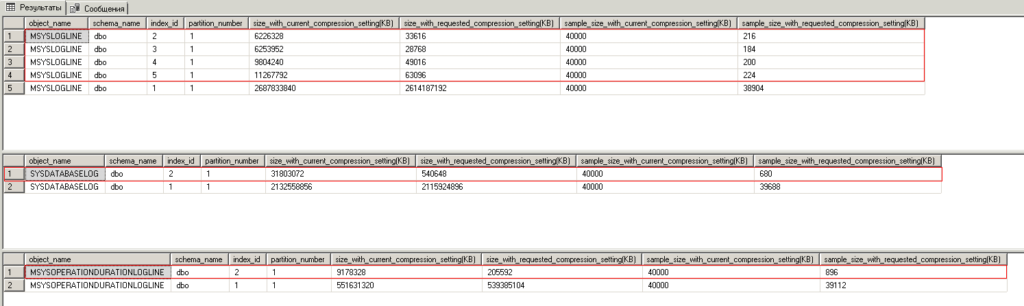

We use a special script for accurate calculation of the compression for a table, by executing which you can get the necessary information.

The screen below shows that compression of non-clustered indexes shows good efficiency, but the data itself (clustered index) is almost not compressed.

Based on the results of the analysis, we can recommend or not recommend the client to use compression for certain tables and indexes.

For example, if by calculation we see that to save 50 GB in a database of about 1 TB we will need to rebuild 5 or more indexes and this will cause the transaction log to grow 2-3 times, we will warn the client against using compression.

3. How to assess the need for file compression

If there is a lot of free space in data files or you released it during a compression application, it may be necessary to compress files.

As a rule, the free space in data files will be filled sooner or later, besides, their compression is not a fast process and strongly affects the performance (index fragmentation increases during compression). If there is about 10% of the initial database size released and it is known that this space will be filled, for example, with data for 2-3 months, compression is not recommended.

However, if you decide to compress data files, you should consider the following:

1. It will take months to compress data files with tables with LOB fields.

2. Compression often creates locks. It is more correct to compress files gradually, “biting off” some parts.

3. If there are several data files and you need to compress or clean some of them, index rebuilding during the compression process can help. The rebuilt objects will be recreated in incompressible files.

Increasing transaction log files

Normally, the transaction log should be a fixed size and should not be more than 80% full. If this limit is exceeded, the transaction log should be analyzed and checked:

1. Its current fullness (it is probably around 100% and the file will continue to grow).

2.The longest transactions.

3.The dates of the last transaction log backup (perhaps there was a backup failure).

4.Status of fault-tolerant or data exchange technologies (if any, the same replication or AAG secondary replica lag may be the reason for not releasing the log).

We can quickly analyze all these four parameters with scripts and procedures of our design.

Compressing the transaction log is a quick task, but we need to make sure that the final size is sufficient and that the file does not start growing again (e.g. due to a suboptimal backup policy).

How to fix a large-size database?

This is a non-trivial task. It is best to entrust it to an experienced professional. We at «DB Serv» have a lot of experience in such work, so we are happy to help your business with analyzing and correcting database growth.